Peter Hicks

At oleander, we work extensively with Apache Spark and Apache Iceberg in our data observability platform. This means we're constantly dealing with Parquet files - they're the backbone of modern data lakes and analytical workloads. Whether it's tracking lineage metadata from Spark jobs or analyzing Iceberg table snapshots, Parquet files are everywhere in our data infrastructure.

Our Goals

- Fast: Quick loading and navigation through large files with millions of rows using scroll virtualization (because nobody has the time to crash their browser on our website)

- Traditional database-like filtering: Filtering, sorting, and paging that actually works across the entire dataset, not just the current page

Our not Goals

- Server-side processing: We don't want to see your data, because your data is your business

- Complex user experience: We don't want users to need a PhD in SQL or remember how to sort on multiple columns

v1: HyParquet & Page Caching

Our first implementation used HyParquet, a pure JavaScript Parquet reader. This project helped us flesh out the virtualization and interface that we wished to work with. It has the ability to page, but the filtering and sorting capabilities are limited and inefficient to run across columns in TypeScript.

So unfortunately this implementation can't help you find all the books in a library where the main character's name starts with "J".

v1 Limitations

- No SQL-like capabilities: Could only read data sequentially, no filtering or sorting

- Performance bottlenecks: Large files with many columns could be slow

- No aggregation: Couldn't perform basic analytics on the data

v2: DuckDB WebAssembly in the Browser

DuckDB via WebAssembly really has bailed us out here since it allows us to just run the SQL that everyone loves to hate directly in the browser.

Why DuckDB?

DuckDB brought several advantages that made us question why we didn't start here:

- Full SQL support: Filter, sort, aggregate, and analyze data with SQL, with possibilities for enhancements

- Optimized Parquet reading: Built-in, highly optimized Parquet support

- Performance: Significantly faster, at least it seems so without knowing how to do benchmarks

DuckDB Implementation

// Lazy load and initialize DuckDB with WebAssembly

const JSDELIVR_BUNDLES = duckdb.getJsDelivrBundles();

const bundle = await duckdb.selectBundle(JSDELIVR_BUNDLES);

const worker_url = URL.createObjectURL(

new Blob([`importScripts("${bundle.mainWorker}");`], {

type: "text/javascript",

}),

);

const worker = new Worker(worker_url);

const logger = new duckdb.ConsoleLogger();

const db = new duckdb.AsyncDuckDB(logger, worker);

await db.instantiate(bundle.mainModule, bundle.pthreadWorker);

The DuckDB implementation works by:

- Loading the file: Reading the Parquet file into DuckDB's virtual file system (like having a magical filing cabinet that can hold infinite files)

- Creating a table: Using

CREATE TABLE ... AS SELECT * FROM parquet_scan(...) - Schema discovery: Using

DESCRIBEto get column information - SQL-based operations: All filtering, sorting, and pagination via SQL queries

Under the Covers

The DuckDB implementation lets us craft SQL expressions:

Filtering

const whereConditions: string[] = [];

Object.entries(filters).forEach(([column, value]) => {

if (value.trim()) {

const columnInfo = schema?.find((col: any) => col.column_name === column);

const columnType = columnInfo?.column_type?.toUpperCase();

if (

columnType &&

(columnType.includes("VARCHAR") || columnType.includes("TEXT"))

) {

whereConditions.push(`${column} ILIKE '%${value}%'`);

} else if (

columnType &&

(columnType.includes("DOUBLE") || columnType.includes("INTEGER"))

) {

whereConditions.push(`CAST(${column} AS VARCHAR) ILIKE '%${value}%'`);

}

}

});

Column Sorting

if (sorting) {

query += ` ORDER BY ${sorting.column} ${sorting.direction.toUpperCase()}`;

}

Paging

const offset = pageNumber * pageSize;

query += ` LIMIT ${pageSize} OFFSET ${offset}`;

Future Possibilities

The DuckDB foundation opens up exciting possibilities:

- History: Save and replay localized states

- Data export: Export filtered/sorted data to CSV or JSON

- Visualization: Column distribution graphs of values in headers like Hugging Face does

- Diff Comparison: Compare data across multiple Parquet files

Let us know what you think

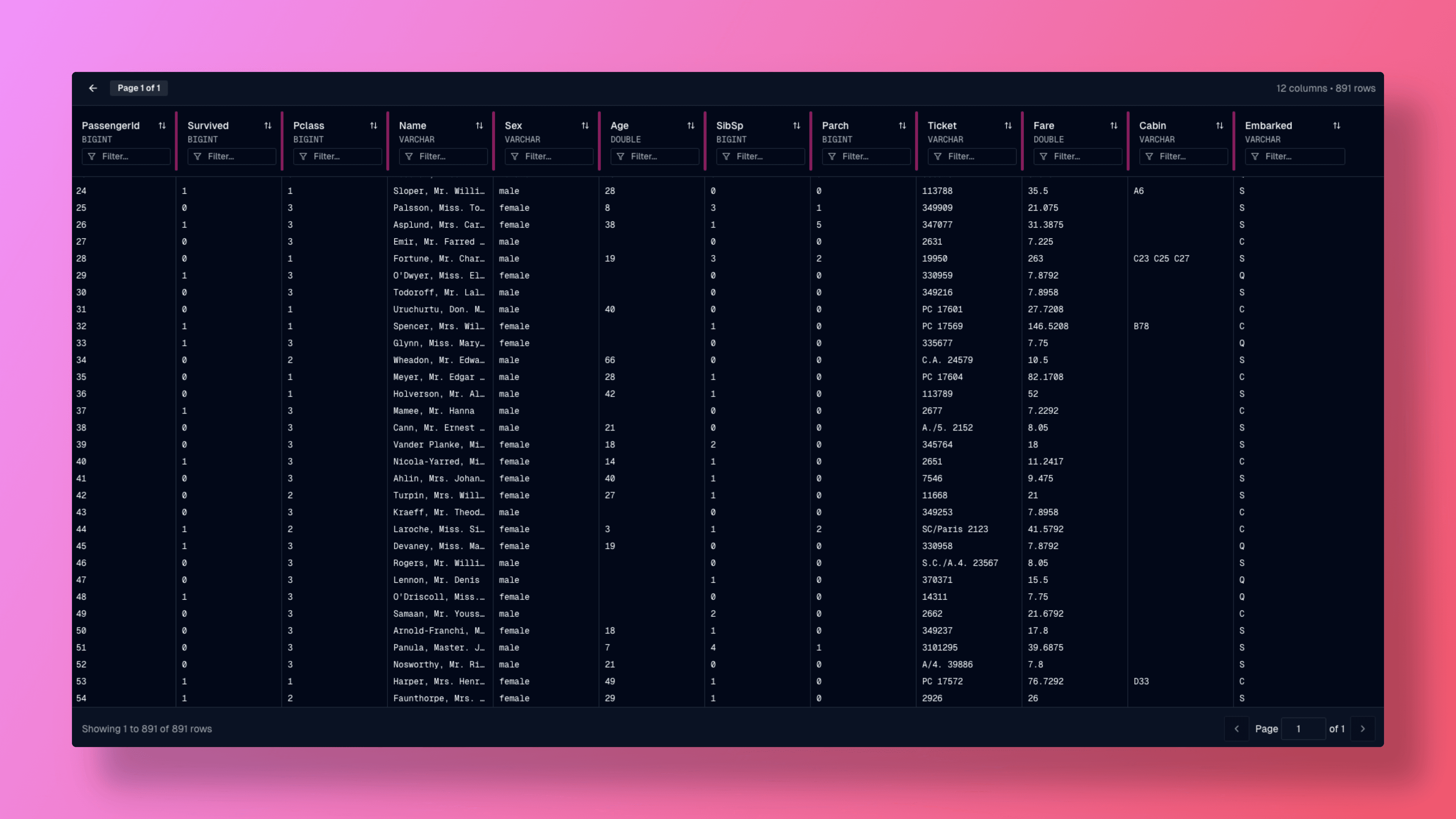

Our tool is available for everyone here. Try it out, break it (let us know what's wrong), and let us know what you think.